Application Architecture (Monolithic & Microservices)

1 Monolithic

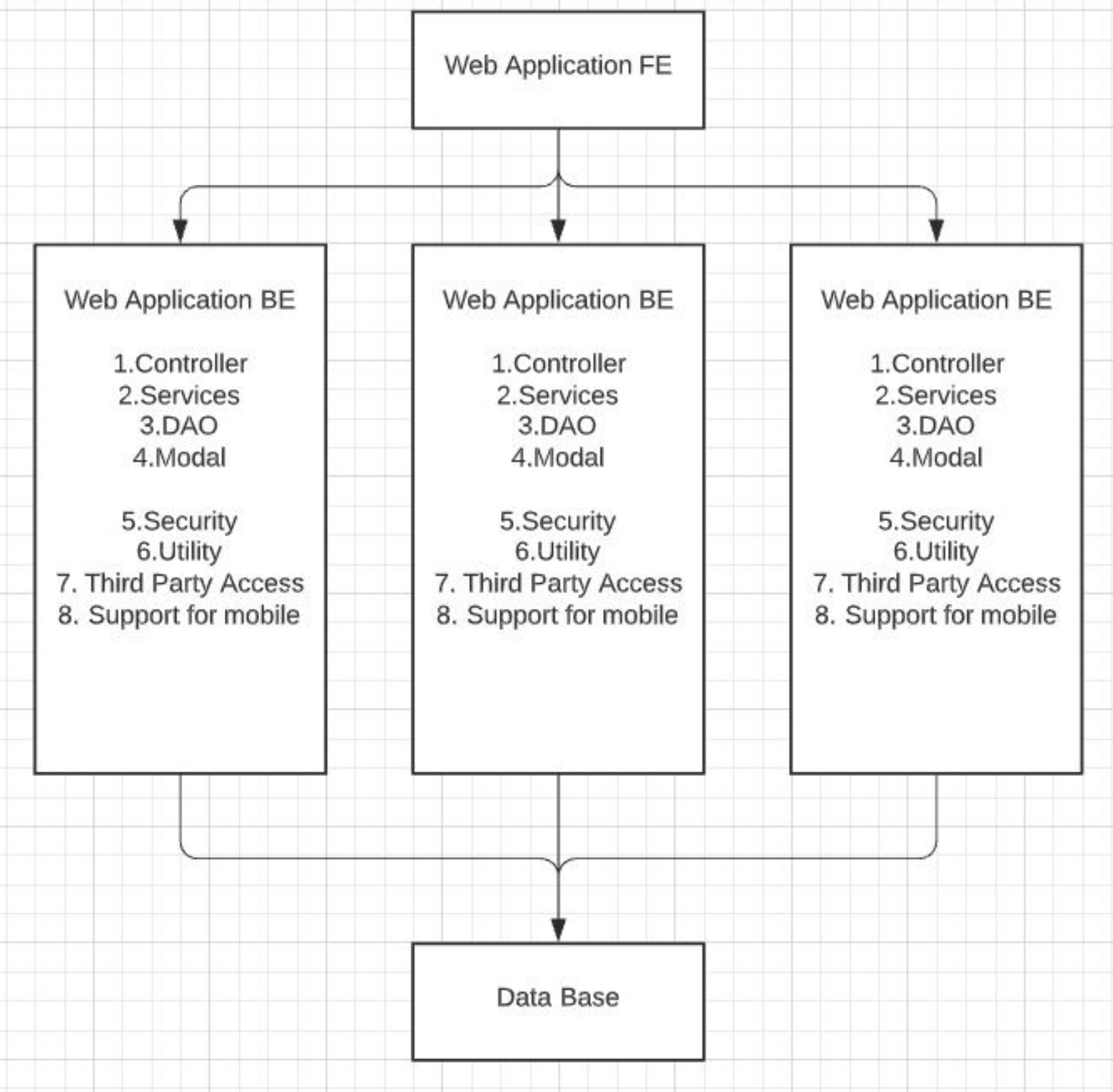

In a monolithic architecture, we put everything in one application.

This architecture has a number of benefits:

- Simple to develop - the goal of current development tools and IDEs is to support the development of monolithic applications

- Simple to deploy - we simply need to deploy the WAR file (or directory hierarchy) on the appropriate runtime

- Simple to scale - we can scale the application by running multiple copies of the application behind a load balancer

Ways to improve performance

- Multi-Threading

- Offline Processing
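
As a rough illustration of the two techniques above, the sketch below uses the JDK's `CompletableFuture` with a thread pool. The class name `PerformanceSketch`, the squaring workload, and the report example are hypothetical stand-ins, not part of any real application.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

class PerformanceSketch {
    // Multi-threading: run independent pieces of work in parallel on a fixed pool.
    static List<Integer> handleInParallel(List<Integer> inputs) {
        ExecutorService pool = Executors.newFixedThreadPool(4);
        try {
            List<CompletableFuture<Integer>> futures = new ArrayList<>();
            for (Integer input : inputs) {
                // input * input is a stand-in for real request handling
                futures.add(CompletableFuture.supplyAsync(() -> input * input, pool));
            }
            List<Integer> results = new ArrayList<>();
            for (CompletableFuture<Integer> future : futures) {
                results.add(future.join()); // collected in submission order
            }
            return results;
        } finally {
            pool.shutdown();
        }
    }

    // Offline processing: accept the request now, produce the result in the background.
    static CompletableFuture<String> generateReportOffline(String name, ExecutorService background) {
        return CompletableFuture.supplyAsync(() -> "report for " + name, background);
    }

    public static void main(String[] args) {
        System.out.println(handleInParallel(List.of(1, 2, 3)));

        ExecutorService background = Executors.newSingleThreadExecutor();
        CompletableFuture<String> pending = generateReportOffline("sales", background);
        System.out.println("request accepted, report pending");
        System.out.println(pending.join());
        background.shutdown();
    }
}
```

Both techniques only help up to the capacity of a single machine, which is what the next question is about.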

But what if the number of requests sent to our application increases and our application reaches its limit?

1.1 Scaling

- Vertical: adding more power to an existing machine. For example, more powerful CPU or more RAM.

- Horizontal: deploying multiple instances of our application.

1.1.1 Vertical (not preferred)

- A more powerful machine is more expensive.

- There is always a limit.

- What if the most powerful machine is still not powerful enough?

1.1.2 Horizontal Scaling

- It still costs money, but it is cheaper than vertical scaling. Multiple normal-performance machines are cheaper than one high-performance machine.

- We can have as many instances as we want. The limit is the resources needed to make those machines.

How does the client-side application know which server-side instance to send its request to?

1.2 Load Balancing

- Load balancing – efficiently distributing incoming network traffic across a group of backend servers.

- A load balancer acts as a traffic distributor sitting in front of all your backend servers.

- It routes each client request to the most appropriate backend server, which maximizes speed and capacity utilization and ensures no server is overworked. The load balancer itself should have no noticeable impact on performance.

1.2.1 Load Balancer Scenario

Imagine your web application is a restaurant, and each table is an instance of the application that can serve a customer (handle a request).

The load balancer in this example is the host who waits at the restaurant's entrance and leads each customer (request) to an empty table (idle application instance).

The host (load balancer) can bring out more tables (instances of the application) if the number of customers (requests) hits the current limit.

1.2.2 Load Balancer

- Can be both software and hardware.

- Can be performed on both client side and server side.

- Types (AWS): Application, Network, Classic, Gateway

- Algorithm: Round-Robin, Weighted Round-Robin…

- Technologies: Nginx, Spring Cloud Netflix Ribbon, Spring Cloud LoadBalancer …
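
To make the two algorithms named above concrete, here is a minimal sketch of a round-robin balancer and a naive weighted variant. The class `RoundRobinBalancer` and the server names are hypothetical, and the weighted version simply repeats a server in the rotation according to its weight, which is an assumption for illustration and not how Nginx or Spring Cloud LoadBalancer implement it.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.atomic.AtomicInteger;

class RoundRobinBalancer {
    private final List<String> servers;
    private final AtomicInteger next = new AtomicInteger();

    RoundRobinBalancer(List<String> servers) {
        if (servers.isEmpty()) {
            throw new IllegalArgumentException("at least one server is required");
        }
        this.servers = List.copyOf(servers);
    }

    // Weighted variant: a server with weight n appears n times in the rotation.
    static RoundRobinBalancer weighted(Map<String, Integer> weights) {
        List<String> expanded = new ArrayList<>();
        weights.forEach((server, weight) -> {
            for (int i = 0; i < weight; i++) {
                expanded.add(server);
            }
        });
        return new RoundRobinBalancer(expanded);
    }

    // Returns the next server in the rotation; floorMod keeps the index valid after overflow.
    String choose() {
        int index = Math.floorMod(next.getAndIncrement(), servers.size());
        return servers.get(index);
    }

    public static void main(String[] args) {
        RoundRobinBalancer plain = new RoundRobinBalancer(List.of("app-1:8080", "app-2:8080"));
        for (int i = 0; i < 4; i++) {
            System.out.println(plain.choose()); // alternates app-1, app-2, app-1, app-2
        }

        Map<String, Integer> weights = new LinkedHashMap<>();
        weights.put("big:8080", 2);
        weights.put("small:8080", 1);
        RoundRobinBalancer weighted = RoundRobinBalancer.weighted(weights);
        for (int i = 0; i < 3; i++) {
            System.out.println(weighted.choose()); // big, big, small
        }
    }
}
```

Plain round-robin treats all instances as equal; the weighted form is useful when some machines can handle more traffic than others.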

However, once the application becomes too large and the team grows in size, there are problems:

- The large monolithic code base intimidates developers, especially ones who are new to the team

- Overloaded IDE and web container

- We might scale up the entire application just for one service.

- Deployment takes a long time.

- It requires a long-term commitment to a technology stack (hard to adopt new technologies).

- One error can take down the entire application.

2 Microservices

2.1 Service Discovery

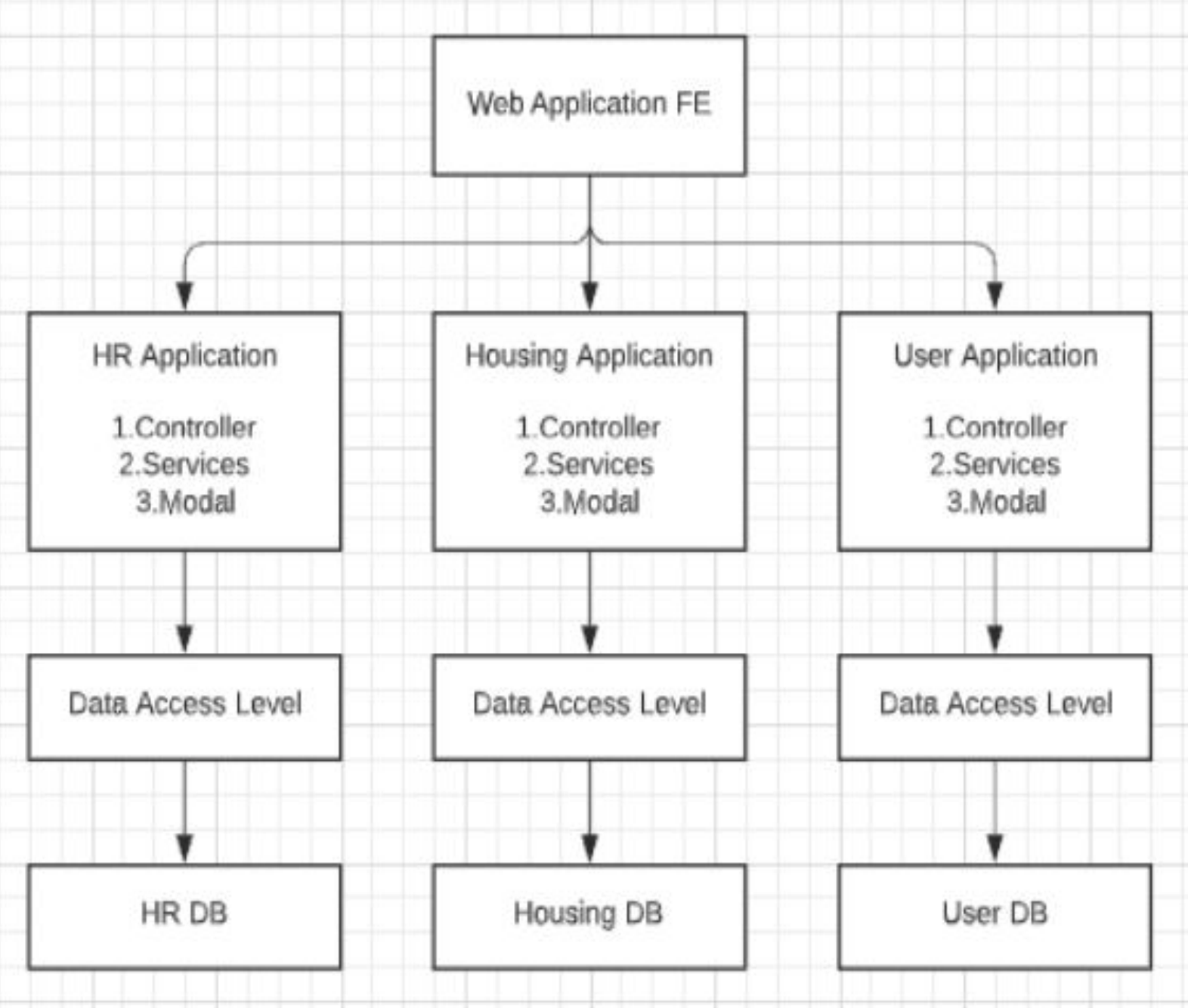

Decouple/split the entire application into different parts.

Each service will have its own:

- Host name, Port

- Application context

- Entry points

- Database connection

- Technology stack

RESTful Project with Microservices

What if a service needs to communicate with another service?

Should we keep the locations (hostname and port number) of all services in every service?

Or should we keep the locations of all services in a central place?

2.2 Service Communication

- Service Discovery Server

- Each service/application needs a way to know where the other services/applications are.

- A Service Discovery Server allows services to find and communicate with each other without hard-coding hostnames and ports.

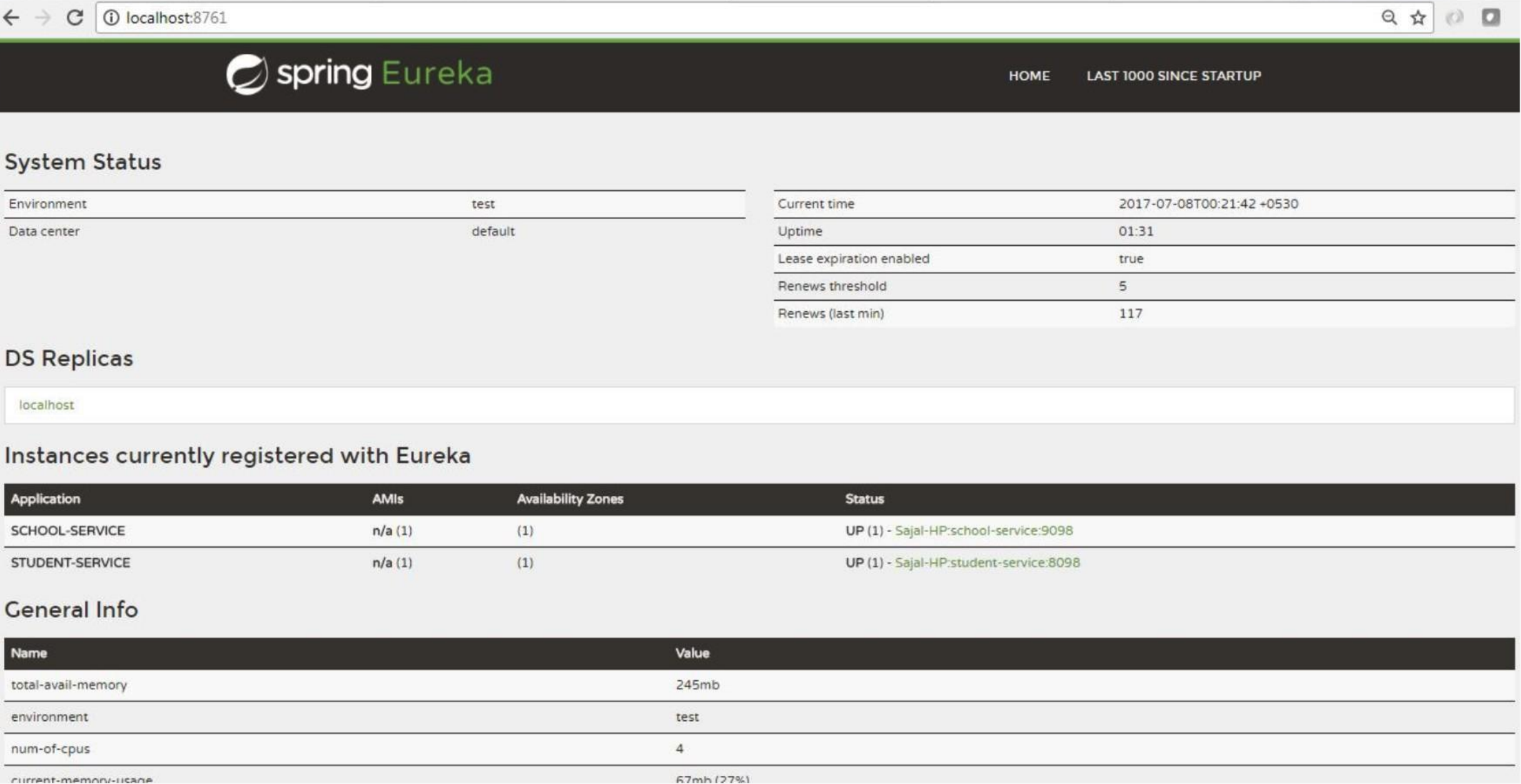
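
The idea of a central place for service locations can be sketched as a small in-memory registry. This is only an illustration under assumptions: `ServiceRegistry`, its method names, and the addresses are hypothetical, and a real discovery server is a separate networked process, not a local map.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.CopyOnWriteArrayList;

class ServiceRegistry {
    // service name -> known locations ("host:port") of its instances
    private final Map<String, List<String>> locations = new ConcurrentHashMap<>();

    // Called by a service instance when it starts.
    void register(String serviceName, String hostAndPort) {
        locations.computeIfAbsent(serviceName, key -> new CopyOnWriteArrayList<>())
                .add(hostAndPort);
    }

    // Called by a service that wants to talk to another one by name.
    List<String> lookup(String serviceName) {
        return List.copyOf(locations.getOrDefault(serviceName, List.of()));
    }

    public static void main(String[] args) {
        ServiceRegistry registry = new ServiceRegistry();
        registry.register("book-service", "10.0.0.5:8081");
        registry.register("book-service", "10.0.0.6:8081");
        // Callers only need the name, never a hard-coded hostname or port.
        System.out.println(registry.lookup("book-service"));
    }
}
```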

2.2.1 Netflix Eureka

- Netflix Eureka provides such functionality.

- Each service registers itself with a service registry and then sends periodic heartbeat signals to it to show that it is still alive.

- A service can then retrieve a list of all registered services from the service registry and use it to make requests to any other service.
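
The heartbeat mechanism can be sketched as a registry that only lists instances whose last heartbeat is recent enough. This is a simplified model, not Eureka's implementation: `HeartbeatRegistry`, the 90-second lease used in `main`, and the rule that the first heartbeat doubles as registration are all assumptions made for brevity.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;
import java.util.stream.Collectors;

class HeartbeatRegistry {
    private final Duration leaseDuration;
    // instance id ("service@host:port") -> time of the last heartbeat
    private final Map<String, Instant> lastSeen = new ConcurrentHashMap<>();

    HeartbeatRegistry(Duration leaseDuration) {
        this.leaseDuration = leaseDuration;
    }

    // Simplification: the first heartbeat registers the instance, later ones renew it.
    void heartbeat(String serviceName, String hostAndPort, Instant now) {
        lastSeen.put(serviceName + "@" + hostAndPort, now);
    }

    // Instances whose last heartbeat is older than the lease are treated as gone.
    List<String> liveInstances(String serviceName, Instant now) {
        String prefix = serviceName + "@";
        return lastSeen.entrySet().stream()
                .filter(e -> e.getKey().startsWith(prefix))
                .filter(e -> Duration.between(e.getValue(), now).compareTo(leaseDuration) <= 0)
                .map(e -> e.getKey().substring(prefix.length()))
                .sorted()
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        HeartbeatRegistry registry = new HeartbeatRegistry(Duration.ofSeconds(90));
        Instant start = Instant.parse("2024-01-01T00:00:00Z");
        registry.heartbeat("greeting-service", "10.0.0.7:9000", start);
        registry.heartbeat("greeting-service", "10.0.0.8:9000", start);
        // Only the first instance keeps sending heartbeats.
        registry.heartbeat("greeting-service", "10.0.0.7:9000", start.plusSeconds(60));
        // 100 seconds in, the silent instance has exceeded its lease.
        System.out.println(registry.liveInstances("greeting-service", start.plusSeconds(100)));
    }
}
```

Time is passed in explicitly so the expiry rule is easy to follow and to test; a real registry would read the clock itself.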

Creating a Eureka Server (service registry) is very easy:

- Add spring-cloud-starter-netflix-eureka-server to the dependencies

- Enable the Eureka Server in a @SpringBootApplication by annotating it with @EnableEurekaServer

- Configure some properties (YML is an alternative to .properties)

  - registerWithEureka and fetchRegistry: we set both to false, since this is our Eureka Server

In order to register our services in the Eureka Server, we have to:

- Add spring-cloud-starter-netflix-eureka-client to our dependencies

- Use @EnableDiscoveryClient or @EnableEurekaClient on a configuration class or on the application starter class

- Configure some properties:

    spring:
      application:
        name: spring-cloud-eureka-client
    server:
      port: 0   # 0 lets Spring Boot pick a random free port
    eureka:
      client:
        serviceUrl:
          defaultZone: ${EUREKA_URI:http://localhost:8761/eureka}
      instance:
        preferIpAddress: true

- registerWithEureka and fetchRegistry default to true.

- preferIpAddress: by default, Eureka registers the application by hostname. That does not work when the application runs on virtual machines that are not registered in DNS, so we register by IP address instead.

2.2.2 Feign

In order to communicate with each other, services need a way to call other services.

Feign is a declarative HTTP client developed by Netflix.

- Simply put, the developer only needs to declare and annotate an interface, while the actual implementation is provisioned at runtime.

Think of Feign as a discovery-aware Spring RestTemplate that uses interfaces to communicate with endpoints. These interfaces are implemented automatically at runtime, and they use service names instead of service URLs.

Without Feign, we would have to autowire an instance of EurekaClient into our controller and use it to look up service information by service name as an Application object.
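
To show what "declare an interface, get the implementation at runtime" can look like, here is a sketch built on the JDK's `java.lang.reflect.Proxy`. It is a conceptual illustration only: the `@Get` annotation, `GreetingApi`, and `create` are hypothetical names, the proxy returns the request it would send instead of performing real HTTP, and none of this should be read as Feign's actual internals.

```java
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;

class DeclarativeClientSketch {
    // Hypothetical annotation that declares which path a method maps to.
    @Retention(RetentionPolicy.RUNTIME)
    @Target(ElementType.METHOD)
    @interface Get {
        String value();
    }

    // The developer writes only this interface; there is no implementing class.
    interface GreetingApi {
        @Get("/greeting")
        String greeting();
    }

    // Builds an implementation at runtime from the interface's annotations.
    static <T> T create(Class<T> api, String serviceName) {
        InvocationHandler handler = (proxy, method, args) -> {
            Get get = method.getAnnotation(Get.class);
            if (get == null) {
                throw new UnsupportedOperationException(method.getName() + " has no @Get mapping");
            }
            // A real client would resolve the service name and send the request here.
            return "GET http://" + serviceName + get.value();
        };
        return api.cast(Proxy.newProxyInstance(api.getClassLoader(), new Class<?>[] {api}, handler));
    }

    public static void main(String[] args) {
        GreetingApi client = create(GreetingApi.class, "spring-cloud-eureka-client");
        System.out.println(client.greeting());
    }
}
```

The caller works with a service name and a typed method, and the URL handling lives in one place instead of being repeated at every call site.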

2.2.2.1 Without Eureka

We use the @RequestLine annotation to specify the HTTP verb and the path, and we model the parameters with the @Param annotation:

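    import feign.Headers;
    import feign.Param;
    import feign.RequestLine;
    import java.util.List;

    // Declarative client: Feign generates the implementation at runtime.
    public interface BookClient {
        @RequestLine("GET /{isbn}")
        BookResource findByIsbn(@Param("isbn") String isbn);

        @RequestLine("GET")
        List<BookResource> findAll();

        @RequestLine("POST")
        @Headers("Content-Type: application/json")
        void create(Book book);
    }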

Now we use Feign.builder() to configure our interface-based client. The actual implementation is provisioned at runtime:

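    // Imports needed: feign.Feign, feign.Logger, feign.okhttp.OkHttpClient,
    // feign.gson.GsonEncoder, feign.gson.GsonDecoder, feign.slf4j.Slf4jLogger
    BookClient bookClient = Feign.builder()
            .client(new OkHttpClient())                  // HTTP transport
            .encoder(new GsonEncoder())                  // request body -> JSON
            .decoder(new GsonDecoder())                  // JSON -> response object
            .logger(new Slf4jLogger(BookClient.class))
            .logLevel(Logger.Level.FULL)
            .target(BookClient.class, "http://localhost:8081/api/books");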

2.2.2.2 With Eureka

We can specify the service/application name with @FeignClient and use @RequestMapping to specify the URL path:

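    import org.springframework.cloud.openfeign.FeignClient;
    import org.springframework.web.bind.annotation.RequestMapping;

    // The value is the name under which the target service registered with Eureka.
    @FeignClient("spring-cloud-eureka-client")
    public interface GreetingClient {
        @RequestMapping("/greeting")
        String greeting();
    }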

We also need to provide some properties so that this Feign client application can find the Eureka Server:

    spring:
      application:
        name: spring-cloud-eureka-feign-client
    server:
      port: 8080
    eureka:
      client:
        serviceUrl:
          defaultZone: ${EUREKA_URI:http://localhost:8761/eureka}

Differences between Feign, RestTemplate and Messaging Queues:

- Messaging queues are asynchronous: the sender does not wait for a response to come back.

- Feign and RestTemplate are synchronous: the caller waits for the response to come back.

- Feign is more abstract: the call is declared on an interface and addressed by service name.

- With RestTemplate, the URL must be specified in each external API call.
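
The synchronous/asynchronous distinction can be sketched with plain JDK types. In this hypothetical example a `Function` stands in for a remote service called through Feign or RestTemplate, and an in-memory `BlockingQueue` stands in for a message broker; the class and method names are assumptions for illustration.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.function.Function;

class CommunicationStyles {
    // Synchronous style: the caller blocks until the response is available.
    static String callSynchronously(Function<String, String> remoteService, String request) {
        return remoteService.apply(request);
    }

    // Asynchronous style: the caller hands the message to the queue and moves on.
    static void publish(BlockingQueue<String> queue, String message) {
        queue.offer(message); // an unbounded LinkedBlockingQueue always accepts
    }

    public static void main(String[] args) throws InterruptedException {
        Function<String, String> orderService = request -> "processed " + request;
        System.out.println(callSynchronously(orderService, "order-1"));

        BlockingQueue<String> queue = new LinkedBlockingQueue<>();
        Thread consumer = new Thread(() -> {
            try {
                // The consumer picks the message up whenever it is ready.
                System.out.println("consumer handled " + queue.take());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();

        publish(queue, "order-2");
        System.out.println("producer continues without waiting for a reply");
        consumer.join();
    }
}
```

In the queue case the producer never sees a return value, so any result has to come back through another message or be looked up later.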

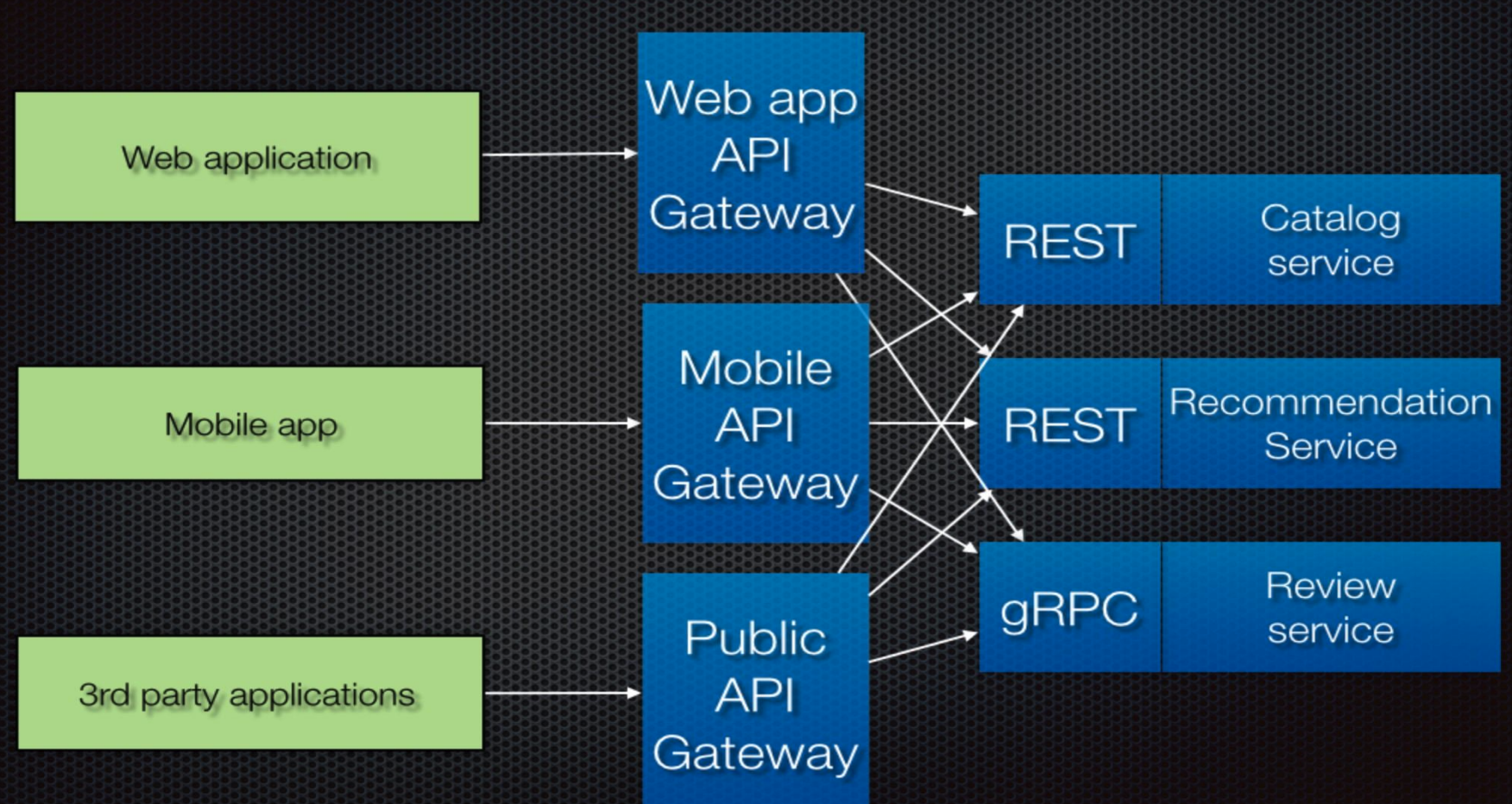

2.3 API Gateway

What if we have 10 services and each service provides 10 entry points? We would have 100 different URLs.

How will the frontend know which endpoint to call for a specific service?

- The API gateway is the single entry point for all clients.

- The API gateway handles requests in one of two ways:

  - Some requests are simply proxied/routed to the appropriate service.

  - Other requests are handled by fanning out to multiple services and combining the results.
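
The two ways of handling a request can be sketched as follows. This is a minimal in-process model under assumptions: `ApiGatewaySketch`, the path prefixes, and the service responses are hypothetical, and each backend service is represented by a plain function instead of a network call.

```java
import java.util.List;
import java.util.Map;
import java.util.concurrent.CompletableFuture;
import java.util.function.Function;
import java.util.stream.Collectors;

class ApiGatewaySketch {
    // path prefix -> backend service, modelled as a function from path to response
    private final Map<String, Function<String, String>> routes;

    ApiGatewaySketch(Map<String, Function<String, String>> routes) {
        this.routes = Map.copyOf(routes);
    }

    // Case 1: proxy the request to the one service that owns the path prefix.
    // Prefixes are assumed not to overlap in this sketch.
    String route(String path) {
        return routes.entrySet().stream()
                .filter(e -> path.startsWith(e.getKey()))
                .findFirst()
                .map(e -> e.getValue().apply(path))
                .orElse("404 no route for " + path);
    }

    // Case 2: fan out to several services in parallel and combine the responses.
    String fanOut(List<String> paths) {
        List<CompletableFuture<String>> calls = paths.stream()
                .map(p -> CompletableFuture.supplyAsync(() -> route(p)))
                .collect(Collectors.toList());
        return calls.stream()
                .map(CompletableFuture::join)
                .collect(Collectors.joining(" | "));
    }

    public static void main(String[] args) {
        Map<String, Function<String, String>> routes = Map.of(
                "/books", path -> "book-service handled " + path,
                "/users", path -> "user-service handled " + path);
        ApiGatewaySketch gateway = new ApiGatewaySketch(routes);

        System.out.println(gateway.route("/books/42"));
        System.out.println(gateway.fanOut(List.of("/users/7", "/books/42")));
    }
}
```

The frontend only needs to know the gateway's address; which service answers which path is a detail kept inside the route table.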

2.4 Transaction Management in Distributed Systems

https://youtu.be/lTAcCNbJ7KE